When creating Devsnap I was pretty naive. I used create-react-app for my frontend and Go with GraphQL for my backend. A classic SPA with client side rendering.

I knew that for this kind of site I would need Google to index a lot of pages, but I wasn't worried, since I knew Google Bot renders JavaScript by now and would index it just fine.

Oh boy, was I wrong.

At first, everything was fine. Google was indexing the pages bit by bit and I got the first organic traffic.

But somehow indexing was really slow. Google was only indexing ~2 pages per minute. I thought it would speed up after some time, but it didn't, so I needed to do something, since my website relies on Google indexing a lot of pages fast.

1. Enter SSR

I started by implementing SSR, because I stumbled across a quote from a Googler stating that client-side rendered websites have to be indexed twice. The first time, Google Bot looks at the initial HTML and immediately follows all the links it can find. The second time comes after it has sent everything to the renderer, which returns the final HTML. That is not only very costly for Google, but also slow. That's why I decided I wanted Google Bot to have all the links in the initial HTML.

I did that by following this fantastic guide. I thought it would take me days to implement SSR, but it actually only took a few hours and the result was very nice.

Without SSR I was stuck at around 20k pages indexed, but now it was steadily growing to >100k.
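
To make the "links in the initial HTML" point concrete, here is a minimal sketch that compares what a crawler can find in a client-side rendered shell versus server-rendered markup before any JavaScript runs. The helper `extractLinks`, the sample markup and the `/jobs/...` paths are made up for illustration and are not taken from the Devsnap code; a regex is only an approximation of real HTML parsing.

```javascript
// Hypothetical helper: collect href values from anchor tags in raw HTML,
// roughly what a crawler can see before any JavaScript has run.
function extractLinks(html) {
  const links = [];
  const anchorPattern = /<a\s[^>]*?href\s*=\s*["']([^"']+)["']/gi;
  let match;
  while ((match = anchorPattern.exec(html)) !== null) {
    links.push(match[1]);
  }
  return links;
}

// Typical create-react-app shell: no links until the bundle has executed.
const csrHtml = '<div id="root"></div><script src="/static/js/main.js"></script>';

// Server-rendered markup (made-up paths): the links are there right away.
const ssrHtml =
  '<div id="root"><a href="/jobs/1">Job 1</a> <a class="tag" href="/jobs/2">Job 2</a></div>';

console.log(extractLinks(csrHtml)); // []
console.log(extractLinks(ssrHtml)); // [ '/jobs/1', '/jobs/2' ]
```

With the client-side rendered shell there is nothing to follow until the page has been rendered, which is the slow second pass described above.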

But it was still not fast enough

Google was now indexing more pages, but it was still too slow. If I ever wanted to get those 250k pages indexed and new job postings discovered fast, I needed to do more.

2. Enter dynamic Sitemap

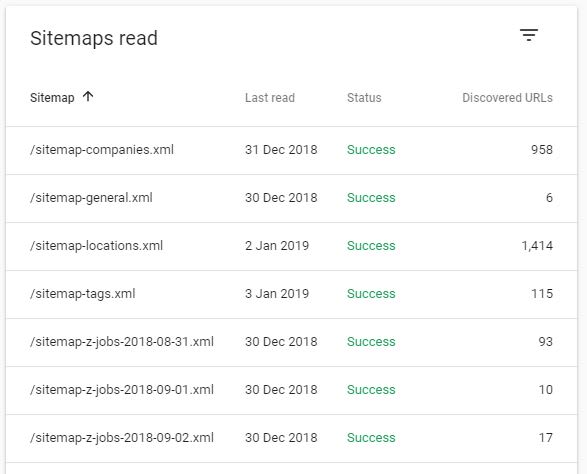

With a site of that size, I figured I'd have to guide Google somehow. I couldn't just rely on Google to crawl everything bit by bit. That's why I created a small service in Go that would create a new Sitemap two times a day and upload it to my CDN.

Since a single sitemap file is limited to 50k URLs, I had to split it up into several files, and I focused only on the pages that had relevant content.

After submitting it, Google instantly started to crawl faster.
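
The splitting step can be sketched roughly as follows. This is not the Go service from the post, just an illustration in JavaScript (to match the snippet further down) of chunking URLs into files of at most 50,000 entries plus a sitemap index that points at them. The function names, the `example.com` URLs and the file naming scheme are assumptions.

```javascript
// Sitemap protocol limit: at most 50,000 URLs per sitemap file.
const MAX_URLS_PER_SITEMAP = 50000;

// Escape the characters that are special in XML text.
function escapeXml(value) {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Split a list of URLs into chunks that each fit into one sitemap file.
function chunkUrls(urls, size = MAX_URLS_PER_SITEMAP) {
  const chunks = [];
  for (let i = 0; i < urls.length; i += size) {
    chunks.push(urls.slice(i, i + size));
  }
  return chunks;
}

// Render one sitemap file for a chunk of URLs.
function renderSitemap(urls) {
  const entries = urls.map((u) => `  <url><loc>${escapeXml(u)}</loc></url>`);
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    "</urlset>",
  ].join("\n");
}

// Render the index file that points at every sitemap file.
function renderSitemapIndex(sitemapUrls) {
  const entries = sitemapUrls.map((u) => `  <sitemap><loc>${escapeXml(u)}</loc></sitemap>`);
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    "</sitemapindex>",
  ].join("\n");
}

// Example with made-up URLs: 120,000 pages end up in three files.
const pageUrls = Array.from({ length: 120000 }, (_, i) => `https://example.com/page/${i}`);
const chunks = chunkUrls(pageUrls);
const sitemapFiles = chunks.map(renderSitemap);
const indexXml = renderSitemapIndex(
  chunks.map((_, i) => `https://cdn.example.com/sitemap-${i + 1}.xml`)
);
console.log(chunks.length); // 3
```

Only the index file needs to be submitted to Google; it lists the individual sitemap files, so regenerating them on a schedule does not require resubmitting anything.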

But it was still not fast enough

I noticed the Google Bot was hitting my site faster, but it was still only 5-10 times per minute. I don't really have an indexing comparison to #1 here, since I started implementing #3 just a day later.

3. Enter removing JavaScript

I was wondering why it was still so slow. I mean, there are other websites out there with a lot of pages as well, and they somehow manage too.

That's when I thought about the statement from #1. It seems plausible that Google only allocates a limited amount of resources to each website for indexing, and my website was still very costly to process: even though Google was seeing all the links in the initial HTML, it still had to send the page to the renderer to make sure there wasn't anything left to index. It simply can't know that everything is already in the initial HTML as long as there is still JavaScript on the page.

So all I did was remove the JavaScript for bots.

    // For crawlers, strip every <script> element from the server-rendered HTML.
    if (isBot(req)) {
      completeHtml = completeHtml.replace(/<script[^>]*>(?:(?!<\/script>)[^])*<\/script>/g, "")
    }
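
The post does not show `isBot`, so here is one possible sketch of it, assuming a Node/Express-style request object with lower-cased header names. The token list in `BOT_PATTERN` is an assumption and far from complete.

```javascript
// Assumed list of crawler user-agent tokens; extend it for the bots you care about.
const BOT_PATTERN = /googlebot|bingbot|duckduckbot|baiduspider|yandex/i;

// Hypothetical isBot: true when the User-Agent header looks like a known crawler.
// Assumes a Node/Express-style request with lower-cased header names.
function isBot(req) {
  const userAgent = (req.headers && req.headers["user-agent"]) || "";
  return BOT_PATTERN.test(userAgent);
}

const googlebotRequest = {
  headers: { "user-agent": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" },
};
const browserRequest = {
  headers: { "user-agent": "Mozilla/5.0 (X11; Linux x86_64; rv:120.0) Gecko/20100101 Firefox/120.0" },
};

console.log(isBot(googlebotRequest)); // true
console.log(isBot(browserRequest)); // false
```

A user-agent check is easy to spoof. That hardly matters here, since a fake bot only gets the same HTML without scripts, but Google also documents a reverse DNS check for cases where the crawler needs to be verified.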

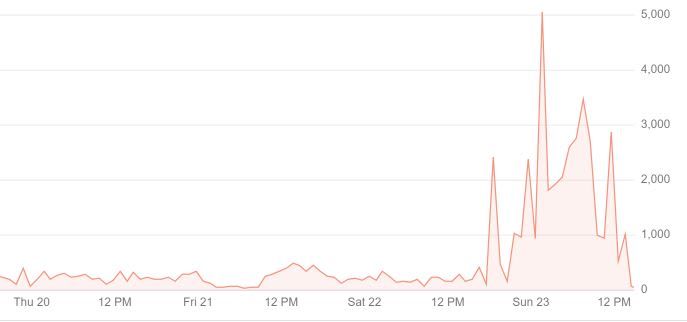

Immediately after deploying that change the Google Bot went crazy. It was now crawling 5-10 pages - not per minute - per second.

And this is the current result. Since I implemented the changes just recently, there are still a lot of pages that have been crawled, but haven't been indexed yet (grey bars). Nevertheless I'm more than happy with the result.

After having Google index all the pages, the performance went up a lot as well.
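
For a sense of scale, here is a back-of-the-envelope calculation of how long one full crawl of 250k pages would take at the two crawl rates mentioned above. It assumes a perfectly steady rate and that every page is fetched exactly once, which real crawling does not guarantee, and crawling is not the same as indexing.

```javascript
// Hours needed to crawl `pages` pages at a steady `pagesPerSecond` rate.
function hoursToCrawl(pages, pagesPerSecond) {
  return pages / pagesPerSecond / 3600;
}

const totalPages = 250000; // page count mentioned earlier in the post

// Before removing JavaScript: 5-10 requests per minute.
const slowBest = hoursToCrawl(totalPages, 10 / 60); // about 417 hours, roughly 17 days
const slowWorst = hoursToCrawl(totalPages, 5 / 60); // about 833 hours, roughly 35 days

// After removing JavaScript: 5-10 pages per second.
const fastBest = hoursToCrawl(totalPages, 10); // about 6.9 hours
const fastWorst = hoursToCrawl(totalPages, 5); // about 13.9 hours

console.log({ slowBest, slowWorst, fastBest, fastWorst });
```

Going from "per minute" to "per second" is a factor of 60, which turns a crawl of several weeks into one of less than a day.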

Conclusion

If you want to have Google index a big website, only feed it the final HTML and remove all the JavaScript (except for inline Schema-JS of course).
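
Note that the one-line regex shown earlier removes every script tag, including inline schema markup. Here is a minimal sketch of a variant that keeps it, assuming "inline Schema-JS" means JSON-LD structured data in `<script type="application/ld+json">` tags. The function name and the sample HTML are made up, and a regex is only an approximation; an HTML parser would be more robust.

```javascript
// Remove every <script> element except those typed application/ld+json.
// Regex-based and therefore approximate; an HTML parser would be more robust.
function stripScriptsKeepJsonLd(html) {
  const scriptPattern = /<script\b([^>]*)>[\s\S]*?<\/script\s*>/gi;
  const jsonLdType = /type\s*=\s*["']?application\/ld\+json["']?/i;
  return html.replace(scriptPattern, (block, attributes) =>
    jsonLdType.test(attributes) ? block : ""
  );
}

// Made-up page: one JSON-LD block, one bundle, one inline state script.
const pageHtml =
  '<head><script type="application/ld+json">{"@type":"JobPosting"}</script></head>' +
  '<body><div id="root">Hello</div><script src="/static/js/main.js"></script>' +
  "<script>window.__STATE__ = {};</script></body>";

console.log(stripScriptsKeepJsonLd(pageHtml));
// <head><script type="application/ld+json">{"@type":"JobPosting"}</script></head><body><div id="root">Hello</div></body>
```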